Introduction to Natural Language Processing in Python

What is Natural Language Processing?

- Field of study focused on making sense of language
    - Using statistics and computers
- You will learn the basics of NLP
    - Topic identification
    - Text classification
- NLP applications include:
    - Chatbots
    - Translation
    - Sentiment analysis
    - ... and many more!

What exactly are regular expressions?

- Strings with a special syntax
- Allow us to match patterns in other strings
- Applications of regular expressions:
    - Find all web links in a document
    - Parse email addresses, remove/replace unwanted characters

import re

# Match a pattern at the beginning of a string (returns a match object, or None)
re.match('abc', 'abcdef')

# Match a word at the beginning of a string
word_regex = r'\w+'
re.match(word_regex, 'hi there!')

Common regex patterns

| Pattern | Matches | Example |
|---|---|---|
| \w+ | word | 'Magic' |
| \d | digit | '9' |
| \s | whitespace (space, tab, newline) | ' ' |
| .* | wildcard (any run of characters except newlines) | 'username74' |
| + or * | greedy repetition | 'aaaaa' |
| \S | not whitespace | 'no_spaces' |
| [a-z] | lowercase character group | 'abcdefg' |
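To see the patterns from the table in action, here is a minimal sketch that runs each one through re.findall or re.match on a made-up sample string (the string and variable names are illustrative only). Note that [a-z] on its own matches a single character; adding + turns it into a run.

```python
import re

# Sample string made up for illustration
sample = "Magic happens at 9 for username74, no_spaces allowed aaaaa"

words = re.findall(r"\w+", sample)          # \w+ : runs of word characters
digits = re.findall(r"\d", sample)          # \d  : one digit per match
spaces = re.findall(r"\s", sample)          # \s  : one whitespace character per match
non_space = re.findall(r"\S+", sample)      # \S+ : runs of non-whitespace characters
lower_runs = re.findall(r"[a-z]+", sample)  # [a-z] is one lowercase letter; + makes it a run

wildcard = re.match(r".*", "username74").group()  # .* consumes the whole line
greedy = re.match(r"a+", "aaaaab").group()        # + grabs as many "a"s as it can

print(words)
print(digits)
print(lower_runs)
print(wildcard, greedy)
```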

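Python's re Module

- split: split a string on regex
- findall: find all patterns in a string
- search: search for a pattern anywhere in a string
- match: match a pattern at the beginning of a string
- Syntax: pattern first, and the string second
- Return types differ: split and findall return a list, search and match return a match object (or None if there is no match), and finditer returns an iterator of match objects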
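A short sketch of what each function returns, using a made-up sentence. It also shows the key difference between search and match: match only looks at the start of the string.

```python
import re

text = "Hi there! My phone number is 555 0199."

pieces = re.split(r"\s+", text)          # list of the pieces between matches
numbers = re.findall(r"\d+", text)       # list of every non-overlapping match
first_number = re.search(r"\d+", text)   # first match anywhere, as a match object
at_start = re.match(r"\d+", text)        # None: the string starts with "Hi"
greeting = re.match(r"\w+", text)        # matches "Hi" at the start
spans = [m.span() for m in re.finditer(r"\d+", text)]  # iterator of match objects

print(pieces)
print(numbers)
print(first_number.group(), first_number.span())
print(at_start, greeting.group())
print(spans)
```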

Practicing regular expressions: re.split() and re.findall()

Here, we'll get a chance to write some regular expressions to match digits, strings and non-alphanumeric characters.

Note: It's important to prefix your regex patterns with r to ensure that your patterns are interpreted the way you want them to be. Otherwise, you may run into problems with escape sequences in strings. For example, "\n" in Python indicates a new line, but with the r prefix, r"\n" is interpreted as a raw string of two characters, the character "\" followed by the character "n", and not as a new line.
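A minimal illustration of why the r prefix matters. The word-boundary anchor \b is a good example, because in a normal (non-raw) string "\b" is turned into a backspace character before the regex engine ever sees it.

```python
import re

# "\n" is one character (a newline); r"\n" is two characters: a backslash and "n"
print(len("\n"), len(r"\n"))

# In a normal string "\b" becomes a backspace character, so this pattern never matches;
# in a raw string it reaches the regex engine as the word-boundary anchor.
plain = re.findall("\bcat\b", "cat concat")
raw = re.findall(r"\bcat\b", "cat concat")
print(plain, raw)
```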

my_string = 'Let\'s write RegEx! Won\'t that be fun? I sure think so. Can you find 4 sentences? Or perhaps, all 19 words?'

print(my_string)

# Write a pattern to match sentence endings: sentence_endings

sentence_endings = r"[.?!]"

# Split my_string on sentence endings and print the result

print(re.split(sentence_endings, my_string))

# Find all capitalized words in my_string and print the result

capitalized_words = r"[A-Z]\w+"

print(re.findall(capitalized_words, my_string))

# Split my_string on spaces and print the result

spaces = r"\s+"

print(re.split(spaces, my_string))

# Find all digits in my_string and print the result

digits = r"\d+"

print(re.findall(digits, my_string))

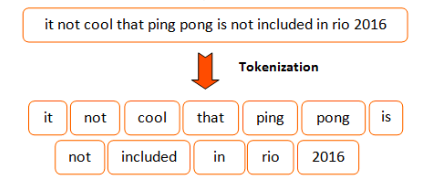

What is tokenization?

- Turning a string or document into tokens (smaller chunks), for example splitting sentences into individual words
- One step in preparing a text for NLP
- Many different theories and rules
- You can create your own rules using regular expressions
- Some examples:
    - Breaking out words or sentences
    - Separating punctuation
    - Separating all hashtags in a tweet

Why tokenize?

- Easier to map part of speech
- Matching common words
- Removing unwanted tokens
- For example, "I don't like Sam's shoes." can be tokenized as:
    - "I", "do", "n't", "like", "Sam", "'s", "shoes", "."
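Since tokenization rules can be written as regular expressions, here is a small hypothetical rule set that reproduces the split above. It is only a sketch for this one style of sentence, not NLTK's word_tokenize: it splits off "n't" and "'s"-style endings, keeps runs of word characters, and makes every other punctuation mark its own token.

```python
import re

# Hypothetical rules, tried left to right at each position:
#   \w+(?=n't)  a word stem directly followed by "n't" (e.g. "do" in "don't")
#   n't         the negation clitic itself
#   '\w+        possessive or contraction endings such as "'s"
#   \w+         any other run of word characters
#   [^\w\s]     a single punctuation mark
TOKEN_PATTERN = r"\w+(?=n't)|n't|'\w+|\w+|[^\w\s]"

def simple_tokenize(text):
    return re.findall(TOKEN_PATTERN, text)

tokens = simple_tokenize("I don't like Sam's shoes.")
print(tokens)
```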

Other nltk tokenizers

- sent_tokenize: tokenize a document into sentences

- regexp_tokenize: tokenize a string or document based on a regular expression pattern

- TweetTokenizer: special class just for tweet tokenization, allowing you to separate hashtags, mentions and lots of exclamation points!!!

Word tokenization with NLTK

In this exercise, we'll use word_tokenize and sent_tokenize from nltk.tokenize to tokenize both words and sentences from Python strings, in this case the first scene of Monty Python's Holy Grail.

scene_one = list()

with open('grail.txt', 'r') as f:
    text = f.readlines()

# Keep every line up to (but not including) the "SCENE 2" heading
for line in text:
    if 'SCENE 2' not in line:
        line = line.rstrip('\n')
        scene_one.append(line)
    else:
        break

print(scene_one[:5])

# convert a list to a string

scene_one = ' '.join(scene_one)

scene_one

# Import necessary modules

from nltk.tokenize import sent_tokenize

from nltk.tokenize import word_tokenize

# Split scene_one into sentences: sentences

sentences = sent_tokenize(scene_one)

# Use word_tokenize to tokenize the fourth sentence: tokenized_sent

tokenized_sent = word_tokenize(sentences[3])

# Make a set of unique tokens in the entire scene: unique_tokens

unique_tokens = set(word_tokenize(scene_one))

# Print the unique tokens result

print(unique_tokens)

More regex with re.search()

Here, we'll utilize re.search() and re.match() to find specific tokens. Both search and match expect regex patterns, similar to those we defined previously. We'll apply these regex library methods to the same Monty Python text from the nltk corpora.

# Search for the first occurrence of "coconuts" in scene_one: match

match = re.search("coconuts", scene_one)

# Print the start and end indexes of match

print(match.start(), match.end())

# Write a regular expression to search for anything in square brackets: pattern1
# (the lazy .*? stops at the first "]"; a greedy .* would run to the last "]" in the scene)
pattern1 = r"\[.*?\]"

# Use re.search to find the first text in square brackets

print(re.search(pattern1, scene_one))

# Find the script notation at the beginning of the fourth sentence and print it

pattern2 = r"[\w]+:"

print(re.match(pattern2, sentences[3]))
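The difference between a greedy and a lazy bracket pattern, and between match and search, is easiest to see on a single short line. The line below is made up in the style of the script.

```python
import re

# A made-up script line with two stage directions
line = "[clop clop clop] ARTHUR: Whoa there! [clop clop]"

greedy = re.search(r"\[.*\]", line).group()   # runs to the LAST "]"
lazy = re.search(r"\[.*?\]", line).group()    # stops at the FIRST "]"
print(greedy)
print(lazy)

# match only succeeds at the start of the string; search scans the whole string
at_start = re.match(r"\w+:", line)            # None: the line starts with "["
speaker = re.search(r"\w+:", line).group()    # 'ARTHUR:'
print(at_start, speaker)
```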

Regex with NLTK tokenization

Twitter is a frequently used source for NLP text and tasks. In this exercise, we'll build a more complex tokenizer for tweets with hashtags and mentions using nltk and regex. The nltk.tokenize.TweetTokenizer class gives you some extra methods and attributes for parsing tweets.

Here, we're given some example tweets to parse using both TweetTokenizer and regexp_tokenize from the nltk.tokenize module.

tweets = ['This is the best #nlp exercise ive found online! #python',

'#NLP is super fun! <3 #learning',

'Thanks @datacamp :) #nlp #python']

# Import the necessary modules

from nltk.tokenize import regexp_tokenize

from nltk.tokenize import TweetTokenizer

# Define a regex pattern to find hashtags: pattern1

pattern1 = r"#\w+"

# Use the pattern on the first tweet in the tweets list

hashtags = regexp_tokenize(tweets[0], pattern1)

print(hashtags)

# Write a pattern that matches both mentions (@) and hashtags

pattern2 = r"([@#]\w+)"

# Use the pattern on the last tweet in the tweets list

mentions_hashtags = regexp_tokenize(tweets[-1], pattern2)

print(mentions_hashtags)

# Use the TweetTokenizer to tokenize all tweets into one list

tknzr = TweetTokenizer()

all_tokens = [tknzr.tokenize(t) for t in tweets]

print(all_tokens)
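A common pitfall when combining mentions and hashtags: inside square brackets, "|" is a literal character, not "or". This small sketch on a made-up string shows the difference between a class containing "|", a plain two-character class, and alternation outside a class.

```python
import re

text = "a|b @datacamp #nlp"

with_pipe = re.findall(r"[@|#]\w+", text)      # "|" is matched literally, so '|b' sneaks in
without_pipe = re.findall(r"[@#]\w+", text)    # only mentions and hashtags
alternation = re.findall(r"(?:@|#)\w+", text)  # "|" means "or" outside a character class

print(with_pipe)
print(without_pipe)
print(alternation)
```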

Non-ASCII tokenization

In this exercise, we'll practice advanced tokenization by tokenizing some non-ASCII text: German with emoji!

german_text = 'Wann gehen wir Pizza essen? 🍕 Und fährst du mit Über? 🚕'

print(german_text)

# Tokenize and print all words in german_text

all_words = word_tokenize(german_text)

print(all_words)

# Tokenize and print only capital words

capital_words = r"[A-ZÜ]\w+"

print(regexp_tokenize(german_text, capital_words))

# Tokenize and print only emoji (a character class of common emoji code-point ranges)
emoji = "[\U0001F300-\U0001F5FF\U0001F600-\U0001F64F\U0001F680-\U0001F6FF\u2600-\u26FF\u2700-\u27BF]"

print(regexp_tokenize(german_text, emoji))

print(german_text)
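Two facts make the German example work with plain regular expressions: in Python 3, \w is Unicode-aware for str patterns (so "ä" and "Ü" count as word characters), and emoji can be matched with a character class of code-point ranges. The ranges below cover only some emoji blocks, so treat them as an illustration rather than a complete emoji matcher.

```python
import re

# \w matches umlauts in Python 3 str patterns
words = re.findall(r"\w+", "Und fährst du mit Über?")
print(words)

# Character class of emoji code-point ranges (no quotes or "|" inside the brackets)
emoji_pattern = "[\U0001F300-\U0001F5FF\U0001F600-\U0001F64F\U0001F680-\U0001F6FF\u2600-\u26FF\u2700-\u27BF]"
found = re.findall(emoji_pattern, "Pizza \U0001F355 oder Taxi \U0001F695?")
print(found)
```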

Charting practice

We will find and chart the number of words per line in the script using matplotlib.

holy_grail = list()

with open('grail.txt', 'r') as f:
    text = f.readlines()

for line in text:
    line = line.rstrip('\n')
    holy_grail.append(line)

import matplotlib.pyplot as plt

# Remove the speaker prefix (e.g. "ARTHUR:" or "SOLDIER #1:") from each script line
pattern = r"[A-Z]{2,}(\s)?(#\d)?([A-Z]{2,})?:"
lines = [re.sub(pattern, '', l) for l in holy_grail]

# Tokenize each line: tokenized_lines
tokenized_lines = [regexp_tokenize(s, r"\w+") for s in lines]

# Make a frequency list of lengths: line_num_words

line_num_words = [len(t_line) for t_line in tokenized_lines]

# Plot a histogram of the line lengths

plt.hist(line_num_words)

# Show the plot

plt.show()

Word counts with bag-of-words

Bag-of-words

Bag-of-words builds a vocabulary from every unique word across all the available text documents, and each word in the vocabulary becomes a feature. For each document, the feature vector holds the count of each vocabulary word in that document; a word that does not appear in the document gets a value of zero. Word order is therefore ignored; only the number of occurrences matters.

- Basic method for finding topics in a text

- Need to first create tokens using tokenization

- ... and then count up all the tokens

- The more frequent a word, the more important it might be

- Can be a great way to determine the significant words in a text
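A minimal sketch of the bag-of-words vectors described above, using two made-up, already tokenized documents. The vocabulary order (alphabetical here) is an arbitrary choice; what matters is that every document is counted against the same vocabulary.

```python
from collections import Counter

# Toy documents, already tokenized and lowercased (made up for illustration)
docs = [
    ["the", "cat", "sat", "on", "the", "mat"],
    ["the", "dog", "sat"],
]

# Vocabulary: every unique token across all documents, in a fixed order
vocab = sorted({tok for doc in docs for tok in doc})

# One count vector per document; words absent from a document get 0
vectors = []
for doc in docs:
    counts = Counter(doc)
    vectors.append([counts[word] for word in vocab])

# Word order is ignored: shuffling the first document gives the same vector
shuffled = ["mat", "the", "on", "sat", "the", "cat"]
shuffled_counts = Counter(shuffled)
shuffled_vector = [shuffled_counts[word] for word in vocab]

print(vocab)
print(vectors)
print(shuffled_vector)
```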

Building a Counter with bag-of-words

In this exercise, we'll build our first bag-of-words counter using a Wikipedia article, which we load from a text file into article.

article = list()

with open('./Wikipedia articles/wiki_text_debugging.txt', 'r') as f:
    text = f.readlines()

for line in text:
    line = line.rstrip('\n')
    article.append(line)

article = ' '.join(article)

# Remove apostrophes and backslashes from the text
bs = r"['\\]"
article = re.split(bs, article)
article = ''.join(article)

article

# Import Counter

from collections import Counter

# Tokenize the article: tokens

tokens = word_tokenize(article)

# Convert the tokens into lowercase: lower_tokens

lower_tokens = [t.lower() for t in tokens]

# Create a Counter with the lowercase tokens: bow_simple

bow_simple = Counter(lower_tokens)

# Print the 10 most common tokens

print(bow_simple.most_common(10))

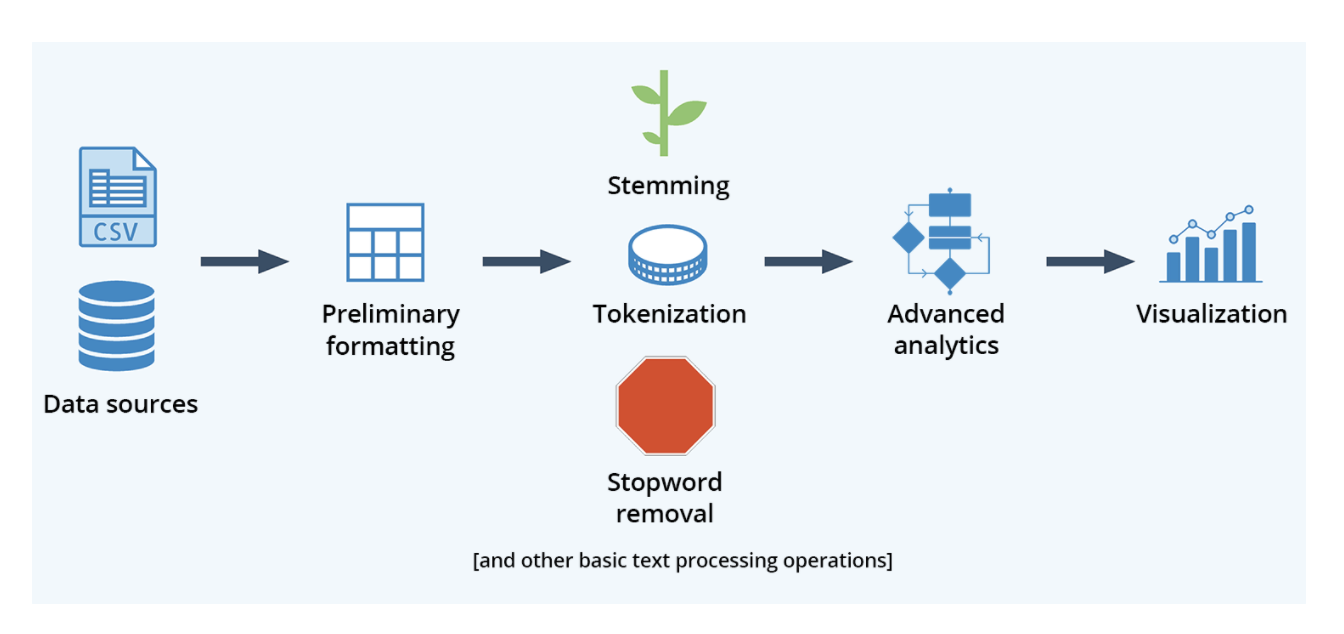

Simple text preprocessing¶

Why preprocess?

- Helps make for better input data

- When performing machine learning or other statistical methods

- Stemming is a process of reducing inflected words to their word stem, i.e root. It doesn’t have to be morphological; you can just chop off the words ends. For example, the word “solv” is the stem of words “solve” and “solved”.

- Lemmatization is another approach to remove inflection by determining the part of speech and utilizing detailed database of the language.

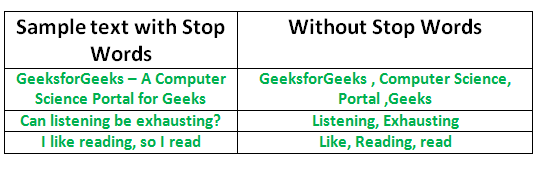

- Stop Words: A stop word is a commonly used word in any natural language (such as “the”, “a”, “an”, “in”).

Examples:

- Tokenization to create a bag of words
- Lowercasing words
- Lemmatization/Stemming
    - Shorten words to their root stems
- Removing stop words, punctuation, or unwanted tokens
- Good to experiment with different approaches
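The steps above can be chained into a small pipeline. This is a toy sketch: the stop-word list is a tiny hypothetical one (NLTK's list is much longer) and toy_stem is a naive suffix-stripping function written for illustration, not the Porter stemmer or any NLTK stemmer. It does reproduce the “solve”/“solved” to “solv” example.

```python
import re

# Tiny hypothetical stop-word list, for illustration only
STOP_WORDS = {"the", "a", "an", "in", "and", "we", "it"}

# Toy stemmer (NOT Porter): chop a common ending if at least 3 letters remain.
# Longer suffixes come first so "solving" loses "ing" rather than just "g".
SUFFIXES = ("ing", "ed", "es", "e", "s")

def toy_stem(word):
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(text):
    tokens = re.findall(r"\w+", text.lower())           # tokenize + lowercase
    tokens = [t for t in tokens if t.isalpha()]          # drop numbers
    tokens = [t for t in tokens if t not in STOP_WORDS]  # drop stop words
    return [toy_stem(t) for t in tokens]                 # shorten to stems

print(toy_stem("solve"), toy_stem("solved"))
print(preprocess("We solved it, and the solving was solved in 2 hours!"))
```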

Text preprocessing practice

Now, we will apply the techniques we've learned to help clean up text for better NLP results. We'll need to remove stop words and non-alphabetic characters, lemmatize, and perform a new bag-of-words on our cleaned text.

from nltk.corpus import stopwords

english_stops = set(stopwords.words('english'))

# Import WordNetLemmatizer

from nltk.stem import WordNetLemmatizer

# Retain alphabetic words: alpha_only (removes punctuation)

alpha_only = [t for t in lower_tokens if t.isalpha()]

# Remove all stop words: no_stops

no_stops = [t for t in alpha_only if t not in english_stops]

# Instantiate the WordNetLemmatizer

wordnet_lemmatizer = WordNetLemmatizer()

# Lemmatize all tokens into a new list: lemmatized

lemmatized = [wordnet_lemmatizer.lemmatize(t) for t in no_stops]

# Create the bag-of-words: bow

bow = Counter(lemmatized)

# Print the 10 most common tokens

print(bow.most_common(10))

Introduction to gensim

What is gensim?

- Popular open-source NLP library
- Uses top academic models to perform complex tasks
    - Building document or word vectors
    - Performing topic identification and document comparison

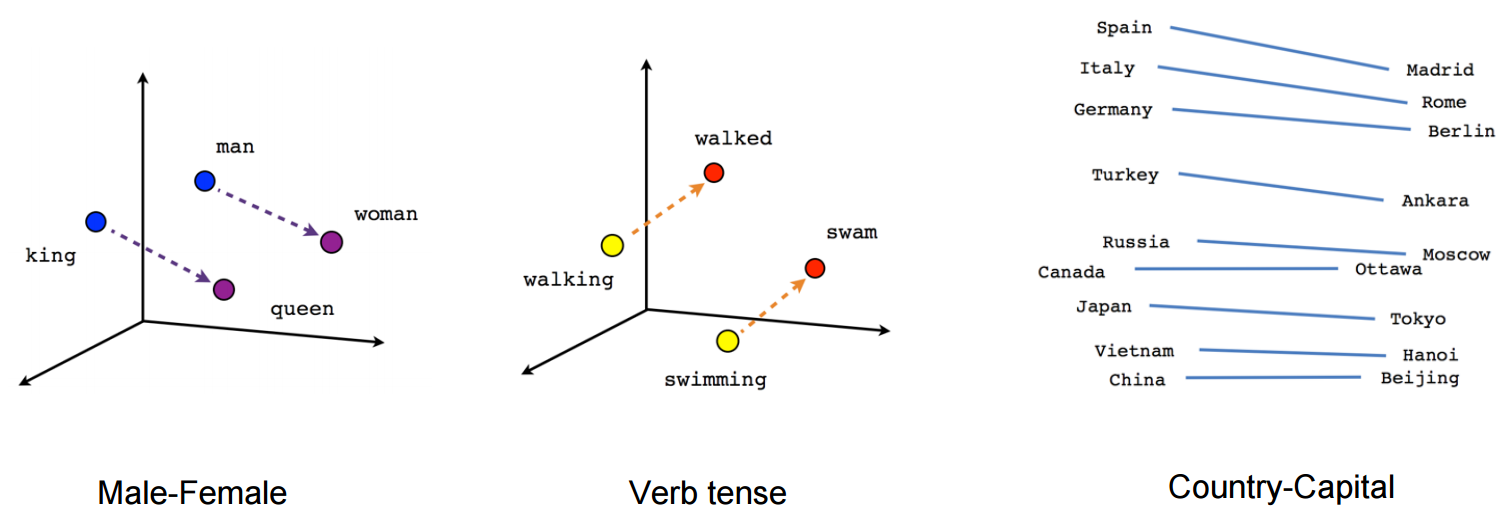

What is a word vector?

The problem with BoW and tf-idf

- Consider the three sentences: 'I am happy', 'I am joyous', 'I am sad'
- If we were to compute their similarities, 'I am happy' and 'I am joyous' would get the same score as 'I am happy' and 'I am sad', whether we vectorize them with BoW or tf-idf.
- This is because 'happy', 'joyous' and 'sad' are treated as completely different words. However, we know that 'happy' and 'joyous' are more similar to each other than to 'sad'. This is something BoW and tf-idf representations simply cannot capture.

Word vectors (also called word embeddings) represent each word numerically, as a vector whose position in a coordinate system reflects how the word is used and what it means. The vectors are learned from the contexts in which words appear: words that appear in similar contexts get similar vectors and are placed closer together in the vector space.

- For example, vectors for "leopard", "lion", and "tiger" will be close together, while they'll be far away from "planet" and "castle". This is great for capturing meaning.
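A minimal sketch of the happy/joyous/sad argument using cosine similarity. The bag-of-words part is exact; the 2-dimensional word vectors are hypothetical, hand-picked numbers standing in for trained embeddings, and averaging word vectors is just one simple way to get a sentence vector.

```python
import math
from collections import Counter

def cosine(u, v):
    # Cosine similarity: dot product divided by the product of the vector lengths
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

toy_sentences = ["i am happy", "i am joyous", "i am sad"]
vocab = sorted({w for s in toy_sentences for w in s.split()})

# Bag-of-words count vectors
bow = []
for s in toy_sentences:
    counts = Counter(s.split())
    bow.append([counts[w] for w in vocab])

# happy-vs-joyous and happy-vs-sad get exactly the same score
print(cosine(bow[0], bow[1]), cosine(bow[0], bow[2]))

# Hypothetical hand-picked 2-d word vectors (NOT trained embeddings)
word_vec = {
    "i": [0.1, 0.1], "am": [0.1, 0.1],
    "happy": [0.9, 0.1], "joyous": [0.8, 0.2], "sad": [-0.8, 0.3],
}

def sentence_vec(s):
    # Average the word vectors of the sentence, dimension by dimension
    vecs = [word_vec[w] for w in s.split()]
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]

emb = [sentence_vec(s) for s in toy_sentences]
# With these vectors, 'i am happy' is much closer to 'i am joyous' than to 'i am sad'
print(cosine(emb[0], emb[1]), cosine(emb[0], emb[2]))
```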

Combining and preprocessing all Wikipedia articles into a list

from os import listdir

from os.path import isfile, join

mypath = './Wikipedia articles'

filenames = [f for f in listdir(mypath) if isfile(join(mypath, f))]

filenames

articles = []

for fname in filenames:
    article = list()
    with open(f'{mypath}/{fname}', 'r') as f:
        text = f.readlines()
    for line in text:
        line = line.rstrip('\n')
        article.append(line)
    article = ' '.join(article)

    # Remove apostrophes and backslashes
    bs = r"['\\]"
    article = re.split(bs, article)
    article = ''.join(article)

    # Tokenize the article: tokens
    tokens = word_tokenize(article)
    # Convert the tokens into lowercase: lower_tokens
    lower_tokens = [t.lower() for t in tokens]
    # Retain alphabetic words: alpha_only (removes punctuation)
    alpha_only = [t for t in lower_tokens if t.isalpha()]
    # Remove all stop words: no_stops
    article = [t for t in alpha_only if t not in english_stops]

    articles.append(article)

Creating and querying a corpus with gensim

It's time to create our first gensim dictionary and corpus!

- The dictionary is a mapping of words to integer ids
- The corpus simply counts the number of occurrences of each distinct word, converts the word to its integer word id and returns the result as a sparse vector.

We'll use these data structures to investigate word trends and potential interesting topics in our document set. The messy Wikipedia articles imported above have already been tokenized, lowercased, and stripped of stop words and punctuation, and are stored in a list of document tokens called articles. From these, we'll generate the gensim dictionary and corpus.

# Import Dictionary

from gensim.corpora.dictionary import Dictionary

# Create a Dictionary from the articles: dictionary with an id for each token

dictionary = Dictionary(articles)

# Select the id for "computer": computer_id

computer_id = dictionary.token2id.get("computer")

# Use computer_id with the dictionary to print the word

print(dictionary.get(computer_id))

# Create a bag-of-words corpus (one list of (token_id, count) pairs per article): corpus

corpus = [dictionary.doc2bow(article) for article in articles]

# Print the first 10 word ids with their frequency counts from the fifth document

print(corpus[4][:10])
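To make the two data structures concrete, here is a pure-Python sketch of the same idea on made-up documents: a token-to-id mapping plus a doc2bow-style list of (token_id, count) pairs per document. It only mimics the shape of gensim's output; gensim may assign ids in a different order.

```python
from collections import Counter

# Toy tokenized documents (made up for illustration)
docs = [["computer", "bug", "debugging"], ["bug", "insect", "bug"]]

# Dictionary: give each distinct token an integer id the first time it is seen
token2id = {}
for doc in docs:
    for tok in doc:
        token2id.setdefault(tok, len(token2id))

def to_bow(doc):
    # doc2bow-style conversion: (token_id, count) pairs, sorted by id
    counts = Counter(token2id[t] for t in doc)
    return sorted(counts.items())

toy_corpus = [to_bow(doc) for doc in docs]
print(token2id)
print(toy_corpus)
```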

Gensim bag-of-words

Now, we'll use our new gensim corpus and dictionary to see the most common terms per document and across all documents. We can use our dictionary to look up the terms.

We will use the Python defaultdict and itertools to help with the creation of intermediate data structures for analysis.

defaultdict allows us to initialize a dictionary that will assign a default value to non-existent keys. By supplying the argument int, we are able to ensure that any non-existent keys are automatically assigned a default value of $0$. This makes it ideal for storing the counts of words in this exercise.

itertools.chain.from_iterable() allows us to iterate through a set of sequences as if they were one continuous sequence. Using this function, we can easily iterate through our corpus object (which is a list of lists).

from collections import defaultdict

import itertools

# Save the fifth document: doc

doc = corpus[4]

# Sort the doc for frequency: bow_doc

bow_doc = sorted(doc, key=lambda w: w[1], reverse=True)

# Print the top 5 words of the document alongside the count

for word_id, word_count in bow_doc[:5]:
    print(dictionary.get(word_id), word_count)

# Create the defaultdict: total_word_count
total_word_count = defaultdict(int)
for word_id, word_count in itertools.chain.from_iterable(corpus):
    total_word_count[word_id] += word_count

# Create a sorted list from the defaultdict: sorted_word_count
sorted_word_count = sorted(total_word_count.items(), key=lambda w: w[1], reverse=True)

print()

# Print the top 5 words across all documents alongside the count
for word_id, word_count in sorted_word_count[:5]:
    print(dictionary.get(word_id), word_count)
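The defaultdict plus itertools.chain.from_iterable pattern is easier to follow on a tiny made-up corpus with the same shape as the gensim corpus (one list of (word_id, count) pairs per document). Variable names are prefixed with toy_ so they do not overwrite the notebook's corpus.

```python
import itertools
from collections import defaultdict

# Toy corpus in gensim's bag-of-words shape
toy_corpus = [[(0, 2), (1, 1)], [(1, 3), (2, 1)], [(0, 1)]]

toy_totals = defaultdict(int)  # a missing key starts at int() == 0
# chain.from_iterable walks all documents' pairs as one flat sequence
for word_id, word_count in itertools.chain.from_iterable(toy_corpus):
    toy_totals[word_id] += word_count

toy_sorted = sorted(toy_totals.items(), key=lambda w: w[1], reverse=True)
print(dict(toy_totals))
print(toy_sorted)
```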

Finding the Optimum Number of Topics

Latent Dirichlet allocation (LDA)

LDA is used to assign the text of a document to particular topics. It builds a topic-per-document model and a words-per-topic model. LDA is a generative statistical model that allows sets of observations to be explained by unobserved groups that explain why some parts of the data are similar. It posits that each document is a mixture of a small number of topics and that each word's presence is attributable to one of the document's topics.

- Each document is modeled as a multinomial distribution of topics and each topic is modeled as a multinomial distribution of words.
- LDA assumes that every chunk of text we feed into it will contain words that are somehow related. Therefore, choosing the right corpus of data is important.
- It also assumes documents are produced from a mixture of topics. Those topics then generate words based on their probability distributions.
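The generative story LDA assumes can be sketched in a few lines: draw a topic mixture for the document from a Dirichlet prior, then for each word pick a topic from that mixture and a word from that topic. This is a simplified illustration under stated assumptions: the two topics and their word probabilities are hypothetical and fixed by hand (full LDA also draws them from a Dirichlet prior), and alpha=0.5 is an arbitrary choice. Fitting an LDA model works backwards from observed documents to these hidden mixtures.

```python
import random

random.seed(0)

def sample_dirichlet(alphas):
    # A Dirichlet sample: normalize independent Gamma(alpha, 1) draws
    draws = [random.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]

# Hypothetical topics, each a probability distribution over words
toy_topics = {
    "computing": {"computer": 0.4, "bug": 0.3, "software": 0.3},
    "biology": {"insect": 0.5, "bug": 0.3, "species": 0.2},
}
topic_names = list(toy_topics)

def generate_document(n_words, alpha=0.5):
    # 1. Draw this document's topic mixture
    theta = sample_dirichlet([alpha] * len(topic_names))
    words = []
    for _ in range(n_words):
        # 2. Pick a topic for this word according to the mixture
        topic = random.choices(topic_names, weights=theta)[0]
        # 3. Pick the word from that topic's word distribution
        dist = toy_topics[topic]
        words.append(random.choices(list(dist), weights=list(dist.values()))[0])
    return theta, words

theta, words = generate_document(8)
print([round(p, 2) for p in theta])
print(words)
```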

Now we can run a batch LDA (because of the small size of the dataset that we are working with) to discover the main topics in our articles.

import warnings

warnings.filterwarnings('ignore')

from gensim.models.ldamodel import LdaModel

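# pyLDAvis 3.3+ provides the gensim helpers as pyLDAvis.gensim_models
# (older releases named this module pyLDAvis.gensim)
import pyLDAvis.gensim_models as gensimvis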

import pyLDAvis

# fit LDA model

topics = LdaModel(corpus=corpus,

id2word=dictionary,

num_topics=5,

passes=10)

# print out first 5 topics

for i, topic in enumerate(topics.print_topics(5)):
    print('\n{} --- {}'.format(i, topic))

Visualizing topics with LDAvis

The display of inferred topics shown above is not very interpretable. LDAvis, an R library developed by Kenny Shirley and Carson Sievert, is an interactive visualization designed to help interpret the topics in a topic model fit to a corpus of text using LDA.

Here, we use pyLDAvis, the Python port of the LDAvis R library. Two great features of pyLDAvis are that it helps interpret the topics extracted from a fitted LDA model and that it can easily be embedded in a Jupyter (IPython) notebook.

vis_data = gensimvis.prepare(topics, corpus, dictionary)

pyLDAvis.display(vis_data)

Term frequency - inverse document frequency (Tf-idf) with gensim

What is tf-idf?

Tf–idf is a numerical statistic that is intended to reflect how important a word is to a document in a collection or corpus. The tf–idf value increases proportionally to the number of times a word appears in the document and is offset by the number of documents in the corpus that contain the word, which helps to adjust for the fact that some words appear more frequently in general.

- Allows you to determine the most important words in each document
- Each corpus may have shared words beyond just stopwords
    - These words should be down-weighted in importance
    - Ensures most common words don't show up as key words
- Keeps document-specific frequent words weighted high, and words common across the entire corpus weighted low.

Tf-idf formula:

$$ w_{i,j} = \mathrm{tf}_{i,j} \cdot \log\left(\frac{N}{\mathrm{df}_{i}}\right) $$

- $w_{i,j}$ = tf-idf weight for token $i$ in document $j$
- $\mathrm{tf}_{i,j}$ = number of occurrences of token $i$ in document $j$
- $\mathrm{df}_{i}$ = number of documents that contain token $i$
- $N$ = total number of documents

For example, in a corpus of astronomy articles, "sky" might appear often in every document without being important to any one of them, so we want to down-weight that word. Tf-idf weights can help determine good topics and keywords for a corpus with a shared vocabulary.
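A worked sketch of the formula on a made-up three-document corpus in which "sky" appears in every document. Because $\mathrm{df}_{\text{sky}} = N$, its weight is $\mathrm{tf} \cdot \log(1) = 0$, while "star" (in one document only, twice) gets $2 \log 3$. This uses the natural log and no normalization; gensim's TfidfModel by default uses a base-2 logarithm and L2-normalizes each document vector, so its absolute numbers will differ even though the ranking idea is the same.

```python
import math
from collections import Counter

# Toy tokenized corpus (made up): "sky" appears in every document
toy_docs = [
    ["sky", "star", "sky", "star"],
    ["sky", "planet"],
    ["sky", "comet", "comet"],
]
n_docs = len(toy_docs)

# df_i: number of documents that contain token i
doc_freq = Counter(tok for doc in toy_docs for tok in set(doc))

def tfidf(doc):
    tf = Counter(doc)  # tf_{i,j}: occurrences of token i in document j
    return {tok: count * math.log(n_docs / doc_freq[tok]) for tok, count in tf.items()}

weights = tfidf(toy_docs[0])
print(weights)
```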

Tf-idf with Wikipedia

Now we will determine new significant terms for our corpus by applying gensim's tf-idf.

# Import TfidfModel

from gensim.models.tfidfmodel import TfidfModel

# Create a new TfidfModel using the corpus: tfidf

tfidf = TfidfModel(corpus)

# Calculate the tfidf weights of doc: tfidf_weights

tfidf_weights = tfidf[doc]

# Print the first five weights

print(tfidf_weights[:5])

print()

# Sort the weights from highest to lowest: sorted_tfidf_weights

sorted_tfidf_weights = sorted(tfidf_weights, key=lambda w: w[1], reverse=True)

# Print the top 5 weighted words

for term_id, weight in sorted_tfidf_weights[:5]:
    print(dictionary.get(term_id), weight)

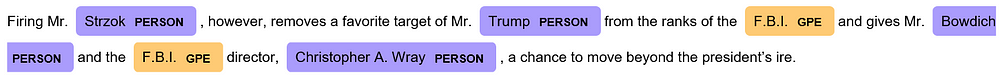

Named Entity Recognition (NER)

Named-entity recognition (NER) is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, percentages, etc. NER is used in many fields in Natural Language Processing (NLP), and it can help answer many real-world questions, such as:

- Which companies were mentioned in the news article?

- Were specified products mentioned in complaints or reviews?

- Does the tweet contain the name of a person? Does the tweet contain this person’s location?

NER with NLTK

We're now going to have some fun with named-entity recognition! We will look at a scraped news article and use nltk to find the named entities in the article.

import pandas as pd

df = pd.read_table('./News articles/uber_apple.txt', header=None)

# Join every row of the file into one article string
text = []
for i in range(df.shape[0]):
    article = df.values[i][0]
    text.append(str(article))

article = ' '.join(text)

article

import nltk

# Tokenize the article into sentences: sentences

sentences = nltk.sent_tokenize(article)

# Tokenize each sentence into words: token_sentences

token_sentences = [nltk.word_tokenize(sent) for sent in sentences]

# Tag each tokenized sentence into parts of speech: pos_sentences

pos_sentences = [nltk.pos_tag(sent) for sent in token_sentences]

# Create the named entity chunks: chunked_sentences

chunked_sentences = nltk.ne_chunk_sents(pos_sentences, binary=True)

# Print every chunk (subtree) labeled 'NE'
for sent in chunked_sentences:
    for chunk in sent:
        if hasattr(chunk, "label") and chunk.label() == "NE":
            print(chunk)
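Chunking groups consecutive tokens into a single entity. NLTK's nltk.chunk.tree2conlltags can flatten an ne_chunk tree into (word, POS tag, IOB tag) triples, where "B-" begins an entity, "I-" continues it and "O" is outside any entity. The sketch below goes the other way on a hypothetical, hand-tagged sentence, rebuilding the entity strings from IOB tags in plain Python.

```python
# Hypothetical (token, IOB tag) pairs, tagged by hand for illustration
tagged = [
    ("Alice", "B-NE"), ("visited", "O"), ("New", "B-NE"), ("York", "I-NE"),
    ("with", "O"), ("Bob", "B-NE"), (".", "O"),
]

def iob_to_chunks(pairs):
    chunks, current = [], []
    for token, tag in pairs:
        if tag.startswith("B-") or (tag.startswith("I-") and not current):
            # A new entity starts: close any open one first
            if current:
                chunks.append(" ".join(current))
            current = [token]
        elif tag.startswith("I-"):
            current.append(token)  # continue the open entity
        else:
            # "O" closes any open entity
            if current:
                chunks.append(" ".join(current))
            current = []
    if current:
        chunks.append(" ".join(current))
    return chunks

print(iob_to_chunks(tagged))
```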

Charting practice

In this exercise, we'll use some extracted named entities and their groupings from a series of newspaper articles to chart the diversity of named entity types in the articles.

We'll use a defaultdict called ner_categories, with keys representing every named entity group type, and values to count the number of each different named entity type. We'll build a chunked sentence list called chunked_sentences as in the last exercise, but this time with non-binary category names.

We will use hasattr() to determine if each chunk has a 'label' and then simply use the chunk's .label() method as the dictionary key.

df = pd.read_table('./News articles/articles.txt', header=None)

# Join every row of the file into one string
text = []
for i in range(df.shape[0]):
    article = df.values[i][0]
    text.append(str(article))

articles = ' '.join(text)

# Tokenize the article into sentences: sentences

sentences = nltk.sent_tokenize(articles)

# Tokenize each sentence into words: token_sentences

token_sentences = [nltk.word_tokenize(sent) for sent in sentences]

# Tag each tokenized sentence into parts of speech: pos_sentences

pos_sentences = [nltk.pos_tag(sent) for sent in token_sentences]

# Create the named entity chunks: chunked_sentences

chunked_sentences = nltk.ne_chunk_sents(pos_sentences, binary=False)

# Create the defaultdict: ner_categories

ner_categories = defaultdict(int)

# Create the nested for loop

for sent in chunked_sentences:
    for chunk in sent:
        if hasattr(chunk, 'label'):
            ner_categories[chunk.label()] += 1

# Create a list from the dictionary keys for the chart labels: labels

labels = list(ner_categories.keys())

# Create a list of the values: values

values = [ner_categories.get(v) for v in labels]

# Create the pie chart

fig, ax = plt.subplots(figsize=(10, 5), subplot_kw=dict(aspect="equal"))

# One explode offset per wedge, so the chart works for any number of NER labels
explode = [0.02 * (i + 1) for i in range(len(values))]

wedges, texts, autotexts = ax.pie(values, labels=labels, autopct='%1.1f%%', startangle=140,

textprops=dict(color="w"), explode=explode, shadow=True)

ax.legend(wedges, labels,

title="NER Labels",

loc="center left",

bbox_to_anchor=(1, 0.2, 0.1, 1))

plt.setp(autotexts, size=12, weight="bold")

ax.set_title("Named Entity Recognition")

plt.show()

Introduction to SpaCy

What is SpaCy?

- NLP library similar to gensim, with different implementations
- Focus on creating NLP pipelines to generate models and corpora
- Open-source, with extra libraries and tools

Why use SpaCy for NER?

- Easy pipeline creation
- Different entity types compared to nltk
- Informal language corpora
    - Easily find entities in Tweets and chat messages

SpaCy entity types:

| TYPE | DESCRIPTION |
|---|---|
| PERSON | People, including fictional. |
| NORP | Nationalities or religious or political groups. |
| FAC | Buildings, airports, highways, bridges, etc. |
| ORG | Companies, agencies, institutions, etc. |
| GPE | Countries, cities, states. |
| LOC | Non-GPE locations, mountain ranges, bodies of water. |
| PRODUCT | Objects, vehicles, foods, etc. (Not services.) |
| EVENT | Named hurricanes, battles, wars, sports events, etc. |
| WORK_OF_ART | Titles of books, songs, etc. |
| LAW | Named documents made into laws. |
| LANGUAGE | Any named language. |
| DATE | Absolute or relative dates or periods. |
| TIME | Times smaller than a day. |
| PERCENT | Percentage, including "%". |
| MONEY | Monetary values, including unit. |
| QUANTITY | Measurements, as of weight or distance. |
| ORDINAL | “first”, “second”, etc. |
| CARDINAL | Numerals that do not fall under another type. |

Comparing NLTK with spaCy NER

We'll now see the results using spaCy's NER annotator. To minimize execution time, we'll disable the tagger and parser components when loading the spaCy model (disable=['tagger', 'parser']), because we only care about the entities in this exercise.

df = pd.read_table('./News articles/uber_apple.txt', header=None)

text = []
for i in range(df.shape[0]):
    article = df.values[i][0]
    text.append(str(article))

article = ' '.join(text)

# Import spacy
import spacy

# Instantiate the English model, skipping the components NER does not need: nlp
nlp = spacy.load('en_core_web_sm', disable=['tagger', 'parser'])

# Create a new document: doc

doc = nlp(article)

# Print all of the found entities and their labels

for ent in doc.ents:
    print(f'{ent.label_}:', ent.text)

Visualize NER in raw text

Let’s use displacy.render to display the raw text with its entities highlighted inline in the notebook.

from spacy import displacy

displacy.render(doc, jupyter=True, style='ent')

Multilingual NER with polyglot

What is polyglot?

- NLP library which uses word vectors
- Why polyglot?
    - Vectors for many different languages
    - More than 130!

French NER with polyglot I

In this exercise and the next, we'll use the polyglot library to identify French entities. The library functions slightly differently than spacy, so we'll use a few of the new things to display the named entity text and category.

df = pd.read_table('./News articles/french.txt', header=None)

text = []
for i in range(df.shape[0]):
    article = df.values[i][0]
    text.append(str(article))

article = ' '.join(text)

from polyglot.text import Text

# Create a new text object using Polyglot's Text class: txt
txt = Text(article)

# Display the entities found in the text
txt.entities

French NER with polyglot II

Here, we'll complete the work we began in the previous exercise.

Our task is to use a list comprehension to create a list of tuples, in which the first element is the entity tag, and the second element is the full string of the entity text.

# Create the list of tuples: entities

entities = [(ent.tag, ' '.join(ent)) for ent in txt.entities]

# Print the entities

print(entities)

French NER with polyglot III

We'll continue our exploration of polyglot now with some French annotation.

Our specific task is to determine how many of the entities contain the words "Charles" or "Cuvelliez" - these refer to the same person in different ways!

# Initialize the count variable: count
count = 0

# Iterate over all the entities
for ent in txt.entities:
    # Check whether the entity contains 'Charles' or 'Cuvelliez'
    if "Charles" in ent or "Cuvelliez" in ent:
        # Increment count
        count += 1

# Print count
print(count)

# Calculate the percentage of entities that refer to Charles Cuvelliez: percentage
percentage = count / len(txt.entities)
print(f'{percentage:.1%}')

Classifying fake news using supervised learning with NLP

Supervised learning with NLP

- Need to use language instead of geometric features
- scikit-learn: Powerful open-source library
- How to create supervised learning data from text?
    - Use bag-of-words models or tf-idf as features

df = pd.read_csv('fake_or_real_news.csv').drop('Unnamed: 0', axis=1)

df.head()

CountVectorizer for text classification

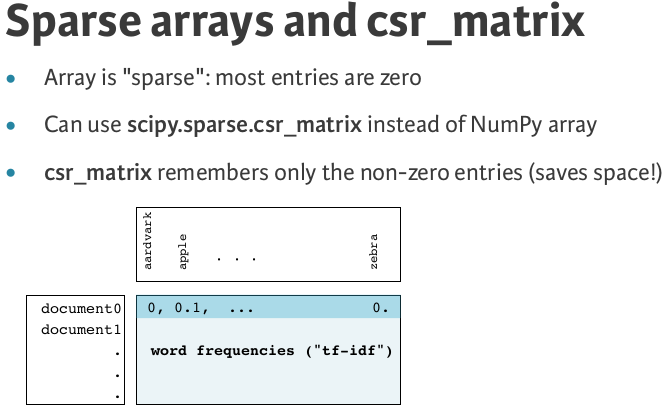

CountVectorizer converts a collection of text documents to a matrix of token counts. This implementation produces a sparse representation of the counts using scipy.sparse.csr_matrix.

It's time to begin building our text classifier!

In this exercise, we'll use pandas alongside scikit-learn to create a sparse text vectorizer we can use to train and test a simple supervised model. To begin, we'll set up a CountVectorizer and investigate some of its features.

# Import the necessary modules

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.model_selection import train_test_split

# Create training and test sets

X_train, X_test, y_train, y_test = train_test_split(df['text'], df['label'], test_size=0.33, random_state=53)

# Initialize a CountVectorizer object: count_vectorizer

count_vectorizer = CountVectorizer(stop_words='english')

# Transform the training data using only the 'text' column values: count_train

count_train = count_vectorizer.fit_transform(X_train)

# Transform the test data using only the 'text' column values: count_test

count_test = count_vectorizer.transform(X_test)

# Print the first 10 features of the count_vectorizer

print(count_vectorizer.get_feature_names_out()[:10])
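The code above calls fit_transform on the training texts and only transform on the test texts: the vocabulary is learned from the training set, and words that appear only in the test set are simply not counted. Here is a minimal pure-Python sketch of that split, not scikit-learn's implementation; the toy sentences and the lowercase-letters tokenizer are assumptions for illustration, no stop words are removed, and it uses dense lists instead of a scipy sparse matrix.

```python
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())

train_docs = ["Fake claims spread fast", "Real reporting checks claims"]
test_docs = ["Unverified claims spread"]

# "fit": learn the vocabulary from the training documents only
vocab = sorted({tok for doc in train_docs for tok in tokenize(doc)})
index = {tok: i for i, tok in enumerate(vocab)}

def transform(docs):
    # "transform": count only tokens already in the vocabulary; unseen ones are dropped
    rows = []
    for doc in docs:
        row = [0] * len(vocab)
        for tok, count in Counter(tokenize(doc)).items():
            if tok in index:
                row[index[tok]] = count
        rows.append(row)
    return rows

print(vocab)
print(transform(test_docs))  # "unverified" is not in the vocabulary, so it is ignored
```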

TfidfVectorizer for text classification

Similar to the sparse CountVectorizer created in the previous exercise, we'll work on creating tf-idf vectors for our documents. We'll set up a TfidfVectorizer and investigate some of its features.

# Import TfidfVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

# Initialize a TfidfVectorizer object: tfidf_vectorizer
# (max_df=0.7 ignores terms that appear in more than 70% of the documents)
tfidf_vectorizer = TfidfVectorizer(stop_words='english', max_df=0.7)

# Transform the training data: tfidf_train

tfidf_train = tfidf_vectorizer.fit_transform(X_train)

# Transform the test data: tfidf_test

tfidf_test = tfidf_vectorizer.transform(X_test)

# Print the first 10 features

print(tfidf_vectorizer.get_feature_names_out()[:10])

# Print the first 5 vectors of the tfidf training data
print('\n', tfidf_train[:5].toarray())

Training and testing a NLP classification model with scikit-learn¶

Naive Bayes classifier

- Commonly used for testing NLP classification problems

- Basis in probability

- Given a particular piece of data, how likely is a particular outcome?

Examples:

- If the plot has a spaceship, how likely is it to be sci-fi?

- Given a spaceship and an alien, how likely now is it sci-fi?

- Each word from CountVectorizer acts as a feature

- Naive Bayes: simple and effective
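The spaceship example can be made concrete with a tiny multinomial Naive Bayes written in plain Python. The four "plots" and their genres are invented, and `fit_naive_bayes` and `posterior` are hypothetical helpers. Word probabilities use additive smoothing with `alpha=1.0`, which matches the default `alpha` of scikit-learn's `MultinomialNB`. The sketch is meant to show the idea, not to replace that class.

```python
import math
from collections import Counter

# Hypothetical toy training data: (plot keywords, genre)
TRAIN = [
    ("spaceship alien planet", "sci-fi"),
    ("alien invasion spaceship", "sci-fi"),
    ("wedding love paris", "romance"),
    ("love letter wedding", "romance"),
]


def fit_naive_bayes(docs, alpha=1.0):
    """Estimate log class priors and smoothed per-class log word probabilities."""
    vocab = {w for text, _ in docs for w in text.split()}
    classes = sorted({label for _, label in docs})
    log_prior, log_likelihood = {}, {}
    for c in classes:
        class_docs = [text for text, label in docs if label == c]
        log_prior[c] = math.log(len(class_docs) / len(docs))
        counts = Counter(w for text in class_docs for w in text.split())
        denominator = sum(counts.values()) + alpha * len(vocab)
        log_likelihood[c] = {w: math.log((counts[w] + alpha) / denominator) for w in vocab}
    return log_prior, log_likelihood


def posterior(model, text):
    """Return P(class | words), ignoring words outside the training vocabulary."""
    log_prior, log_likelihood = model
    scores = {
        c: log_prior[c] + sum(log_likelihood[c][w] for w in text.split() if w in log_likelihood[c])
        for c in log_prior
    }
    # Turn log scores into probabilities that sum to 1 (subtracting the max for numerical stability)
    top = max(scores.values())
    weights = {c: math.exp(s - top) for c, s in scores.items()}
    total = sum(weights.values())
    return {c: w / total for c, w in weights.items()}


model = fit_naive_bayes(TRAIN)
print(round(posterior(model, "spaceship")["sci-fi"], 3))        # 0.75
print(round(posterior(model, "spaceship alien")["sci-fi"], 3))  # 0.9
```

Seeing "spaceship" alone gives sci-fi a probability of 0.75. Adding "alien" raises it to 0.9, because Naive Bayes multiplies (adds, in log space) the evidence from each word as if the words were independent.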

Training and testing the "fake news" model with CountVectorizer¶

Now it's time to train the "fake news" model using the features we identified and extracted. In this first exercise, we'll train and test a Naive Bayes model using the CountVectorizer data.

# Import the necessary modules

from sklearn.naive_bayes import MultinomialNB

from sklearn import metrics

import matplotlib.pyplot as plt

# Instantiate a Multinomial Naive Bayes classifier: nb_classifier

nb_classifier = MultinomialNB()

# Fit the classifier to the training data

nb_classifier.fit(count_train, y_train)

# Create the predicted tags: pred

pred = nb_classifier.predict(count_test)

# Calculate the accuracy score: score

score = metrics.accuracy_score(y_test, pred)

print(f'Accuracy = {score}')

# Plot the confusion matrix, with each row normalized by the number of true samples in that class

metrics.ConfusionMatrixDisplay.from_estimator(nb_classifier, count_test, y_test, normalize='true', labels=['FAKE', 'REAL'])

plt.show()
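`accuracy_score` is the fraction of predictions that match the true labels. With `normalize='true'`, each row of the confusion matrix (one row per true label, one column per predicted label) is divided by that row's total. Each row therefore shows how the documents of one true class were classified. The sketch below recomputes both quantities by hand in plain Python on made-up labels. It is illustrative only and is not how scikit-learn implements them. `accuracy` and `row_normalized_confusion` are hypothetical names.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that equal the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def row_normalized_confusion(y_true, y_pred, labels):
    """Rows = true labels, columns = predicted labels; each row divided by its total."""
    pairs = Counter(zip(y_true, y_pred))
    matrix = []
    for t in labels:
        row = [pairs[(t, p)] for p in labels]
        row_total = sum(row)
        matrix.append([c / row_total if row_total else 0.0 for c in row])
    return matrix


# Made-up labels for illustration only
y_true = ['FAKE', 'FAKE', 'FAKE', 'REAL', 'REAL']
y_pred = ['FAKE', 'REAL', 'FAKE', 'REAL', 'REAL']

print(accuracy(y_true, y_pred))  # 0.8
print(row_normalized_confusion(y_true, y_pred, ['FAKE', 'REAL']))
```

The diagonal of the normalized matrix gives the per-class recall. In this toy case it is 2/3 for FAKE and 1.0 for REAL.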

Training and testing the "fake news" model with TfidfVectorizer¶

Now that we have evaluated the model using the CountVectorizer, we'll do the same using the TfidfVectorizer with a Naive Bayes model.

# Create a Multinomial Naive Bayes classifier: nb_classifier

nb_classifier = MultinomialNB()

# Fit the classifier to the training data

nb_classifier.fit(tfidf_train, y_train)

# Create the predicted tags: pred

pred = nb_classifier.predict(tfidf_test)

# Calculate the accuracy score: score

score = metrics.accuracy_score(y_test, pred)

print(f'Accuracy = {score}')

# Plot the confusion matrix, with each row normalized by the number of true samples in that class

metrics.ConfusionMatrixDisplay.from_estimator(nb_classifier, tfidf_test, y_test, normalize='true', labels=['FAKE', 'REAL'])

plt.show()

Improving our model I¶

Our job in this exercise is to test several values of alpha, the additive smoothing parameter of MultinomialNB, on the tf-idf vectors and determine whether any of them performs better than the default (alpha=1.0).
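`alpha` is added to every word count before the per-class word probabilities are estimated. Each probability becomes (count + alpha) / (class total + alpha × vocabulary size). The sketch below evaluates that formula for a hypothetical class of 100 tokens over a 50-term vocabulary. `smoothed_prob` is an illustrative helper. With tf-idf input, the "counts" would be summed tf-idf weights rather than integers.

```python
def smoothed_prob(word_count, class_total, vocab_size, alpha):
    """P(word | class) with additive smoothing: (count + alpha) / (total + alpha * vocab_size)."""
    return (word_count + alpha) / (class_total + alpha * vocab_size)


# Hypothetical class: 10 terms seen 10 times each and 40 terms never seen (50-term vocabulary)
counts = [10] * 10 + [0] * 40
total = sum(counts)

for alpha in [0.0, 0.1, 0.5, 1.0]:
    seen = smoothed_prob(10, total, len(counts), alpha)
    unseen = smoothed_prob(0, total, len(counts), alpha)
    print(f"alpha={alpha}: seen word {seen:.4f}, unseen word {unseen:.4f}")
```

With alpha=0, the unseen-word probability is exactly zero, so a single such word rules the class out. Larger values of alpha shift probability mass from frequent words to rare and unseen ones, while each class's probabilities still sum to 1.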

import numpy as np

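# Create the list of alphas: alphas (starting at 0.1, because alpha=0 turns off smoothing and gives zero probability to any word never seen with a class)

alphas = np.arange(0.1, 1, .1)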

# Define train_and_predict()

def train_and_predict(alpha):

    # Instantiate the classifier: nb_classifier

    nb_classifier = MultinomialNB(alpha=alpha)

    # Fit to the training data

    nb_classifier.fit(tfidf_train, y_train)

    # Predict the labels: pred

    pred = nb_classifier.predict(tfidf_test)

    # Compute accuracy: score

    score = metrics.accuracy_score(y_test, pred)

    return score

# Iterate over the alphas and print the corresponding score

for alpha in alphas:

    print('Alpha: ', alpha)

    print('Score: ', train_and_predict(alpha))

    print()

# Create a Multinomial Naive Bayes classifier with the best alpha

nb_classifier = MultinomialNB(alpha=0.1)

# Fit the classifier to the training data

nb_classifier.fit(tfidf_train, y_train)

# Create the predicted tags: pred

pred = nb_classifier.predict(tfidf_test)

# Calculate the accuracy score: score

score = metrics.accuracy_score(y_test, pred)

print(f'Accuracy = {score}')

# Plot the confusion matrix, with each row normalized by the number of true samples in that class

metrics.ConfusionMatrixDisplay.from_estimator(nb_classifier, tfidf_test, y_test, normalize='true', labels=['FAKE', 'REAL'])

plt.show()

Improving our model II¶

Our job in this exercise is to test a few different settings of the TfidfVectorizer. Specifically, we will vary the analyzer ('word', 'char' or 'char_wb') and the ngram_range.

- Characters are the basic symbols that are used to write or print a language. For example, the characters used by the English language consist of the letters of the alphabet, numerals, punctuation marks and a variety of symbols (e.g., the dollar sign and the arithmetic symbols).

- An n-gram is a contiguous sequence of $n$ items from a given sample of text or speech. The items can be phonemes, syllables, letters, words or base pairs according to the application. The n-grams typically are collected from a text or speech corpus.
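The three analyzer options split text differently before n-grams are formed. The plain-Python sketch below approximates them:

- 'word' builds n-grams from tokens.
- 'char' slides over the whole string, spaces included.
- 'char_wb' builds character n-grams only inside words, padding each word with a space on both sides.

This is only an approximation of scikit-learn's behaviour. It lowercases and splits on whitespace, and it skips stop-word removal and the regex tokenizer. `word_ngrams`, `char_ngrams` and `char_wb_ngrams` are hypothetical names.

```python
def word_ngrams(text, ngram_range):
    """All word n-grams with lo <= n <= hi, shortest first (like analyzer='word')."""
    tokens = text.lower().split()
    lo, hi = ngram_range
    return [" ".join(tokens[i:i + n]) for n in range(lo, hi + 1) for i in range(len(tokens) - n + 1)]


def char_ngrams(text, ngram_range):
    """Character n-grams over the whole string, spaces included (like analyzer='char')."""
    text = " ".join(text.lower().split())  # collapse runs of whitespace
    lo, hi = ngram_range
    return [text[i:i + n] for n in range(lo, hi + 1) for i in range(len(text) - n + 1)]


def char_wb_ngrams(text, ngram_range):
    """Character n-grams inside space-padded words only (like analyzer='char_wb')."""
    lo, hi = ngram_range
    grams = []
    for word in text.lower().split():
        padded = f" {word} "
        for n in range(lo, hi + 1):
            # A word shorter than n still contributes its whole padded form once
            grams.extend(padded[i:i + n] for i in range(max(len(padded) - n + 1, 1)))
    return grams


print(word_ngrams("fake news spreads", (1, 2)))  # ['fake', 'news', 'spreads', 'fake news', 'news spreads']
print(char_ngrams("fake news", (3, 3)))          # ['fak', 'ake', 'ke ', 'e n', ' ne', 'new', 'ews']
print(char_wb_ngrams("fake news", (3, 3)))       # [' fa', 'fak', 'ake', 'ke ', ' ne', 'new', 'ews', 'ws ']
```

'char' produces the cross-word trigram 'e n', while 'char_wb' never does. Word-boundary n-grams therefore tend to capture prefixes and suffixes rather than fragments that span two words.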

import itertools

analyzers = ['word', 'char', 'char_wb']

ngrams = [(1,1), (1,2), (1,3), (2,2), (2,3), (3,3)]

params = [analyzers, ngrams]

print('Parameters:')

print(params)

# Every (analyzer, ngram_range) combination: 3 x 6 = 18 settings

p_list = list(itertools.product(*params))

print('\nSearch space size = ', len(p_list))

print('\nParameters Space:', p_list)

for analyzer, ngram in p_list:

    # Initialize a TfidfVectorizer object: tfidf_vectorizer
    # (stop_words only applies to the 'word' analyzer, so it is passed only in that case)

    tfidf_vectorizer = TfidfVectorizer(analyzer=analyzer, stop_words='english' if analyzer == 'word' else None, max_df=0.7, ngram_range=ngram)

    # Fit the vectorizer on the training text and transform it: tfidf_train

    tfidf_train = tfidf_vectorizer.fit_transform(X_train)

    # Transform the test data: tfidf_test

    tfidf_test = tfidf_vectorizer.transform(X_test)

    # Create a Multinomial Naive Bayes classifier: nb_classifier

    nb_classifier = MultinomialNB(alpha=0.1)

    # Fit the classifier to the training data

    nb_classifier.fit(tfidf_train, y_train)

    # Create the predicted tags: pred

    pred = nb_classifier.predict(tfidf_test)

    # Calculate the accuracy score: score

    score = metrics.accuracy_score(y_test, pred)

    print(f'Analyzer: {analyzer} \nngram: {ngram} \nAccuracy = {round(score,2)}')

    # Plot the confusion matrix, with each row normalized by the number of true samples in that class

    metrics.ConfusionMatrixDisplay.from_estimator(nb_classifier, tfidf_test, y_test, normalize='true', labels=['FAKE', 'REAL'])

    plt.show()